Overview

Jonathan Smith: j.p.smith@reading.ac.uk

International Study and Language Institute (ISLI)

This article describes how we responded to the need to convert paper-based learning materials, designed for our predominantly face-to-face summer Pre-sessional programme, into an online format so that students could work independently and, where appropriate, receive automated feedback on tasks. We give a rationale for our approach, then discuss the challenges we faced and the solutions we found, and reflect on what we learned from the process.

Objectives

The objectives of the project were broadly to:

- rethink ways in which learning content and tasks could be presented to students in online learning formats

- convert paper-based learning materials intended for 80 – 120 hours of learning to online formats

- make the new online content available to students through Blackboard and monitor usage

- elicit feedback from students and teaching staff on the impacts of the online content on learning.

It should be emphasised that, because we had to develop a fully online course in approximately 8 weeks, we focused mainly on the first three of these objectives.

Context

The move from a predominantly face-to-face summer Pre-sessional programme, with 20 hours/week contact time and some blended-learning elements, to fully-online provision in Summer 2020 presented both threats and opportunities to ISLI. We realised very early on that it would not be prudent to attempt 20 hours/week of live online teaching and learning, particularly since most of that teaching would be provided by sessional staff, working from home, with some working from outside the UK, where it would be difficult to provide IT support. In addition, almost all students would be working from outside the UK, and we knew there would be connectivity issues that would impact on the effectiveness of live online sessions. In the end, there were 4 – 5 hours/week of live online classes, which meant that a lot of the core course content had to be covered asynchronously, with students working independently.

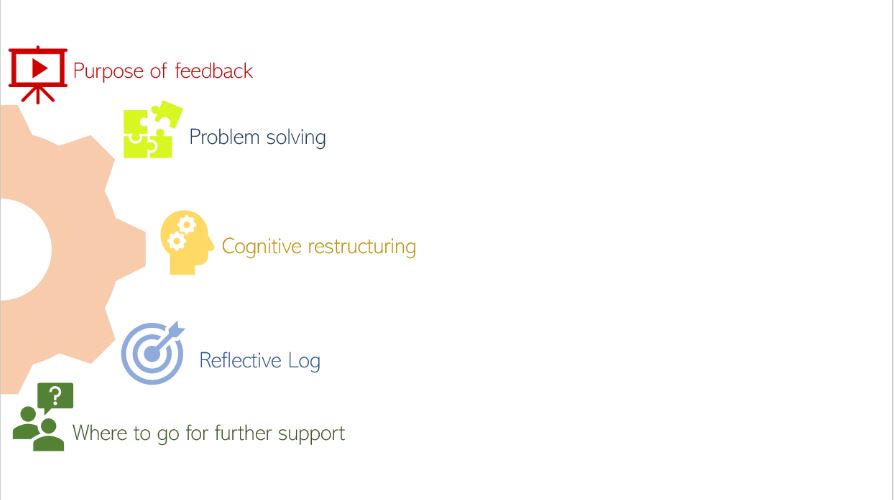

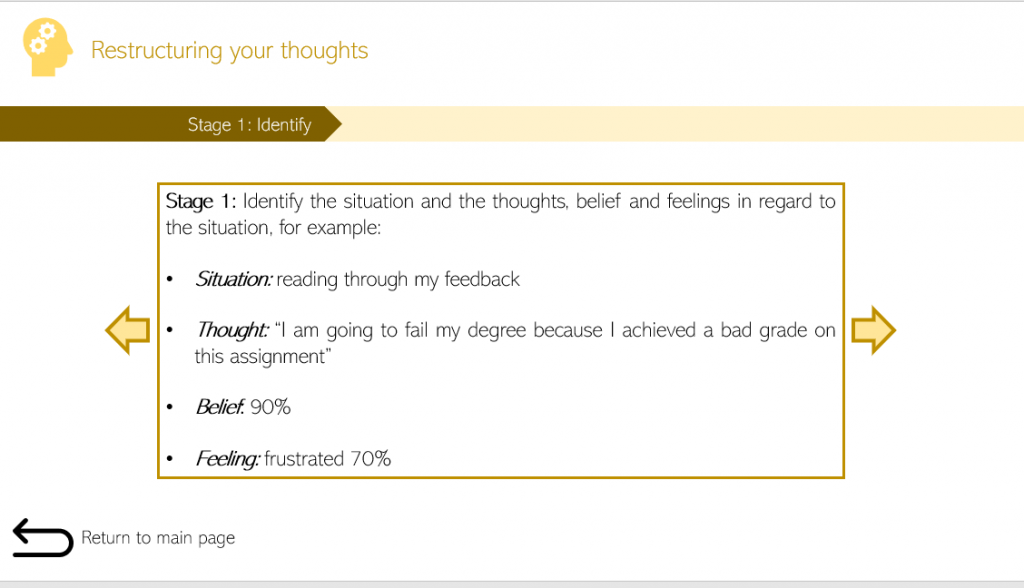

We had been working with Xerte, an open-source tool for authoring online learning materials, for about 3 years, creating independent study materials for consolidating and extending learning based around print materials. This was an opportunity to put engaging, interactive online learning materials at the heart of the programme. These are some of the reasons why we chose Xerte:

- It allows inputs (text, audio, video, images), interactive tasks and feedback to be co-located on the same webpage.

- There is a very wide range of interactive task types, including drag-and-drop ordering, categorising and matching tasks, and “hotspot” tasks in which clicking on part of a text or image produces customisable responses.

- It offers considerable flexibility in planning navigation through learning materials, and in the ways feedback can be presented to learners.

- Learning materials can be created by academic staff without the need for much training or support.

Xerte was only one of the tools for asynchronous learning that we used on the programme. We also used stand-alone videos, Discussion Board tasks in Blackboard, asynchronous speaking skills tasks in Flipgrid, and written tasks submitted for formative or summative feedback through Turnitin. We also included a relatively small number of tasks presented as Word docs or PDFs, with a self-check answer key.

Implementation

We knew that we only had time to convert the existing paper-based learning materials into an online format, rather than start with a blank canvas. However, it very quickly became clear that the highly interactive classroom methodology underlying those materials would be difficult to translate into a fully online format with a greater emphasis on asynchronous learning and automated feedback. As far as possible, we took a flipped learning approach to make efficient use of time in live lessons, but this meant that a lot of content that would normally have been covered in a live lesson had to be repackaged for asynchronous learning.

In April 2020, when we started to plan the fully online programme, we had a limited number of staff who were able to author in Xerte. Fortunately, we had developed a self-access training resource, which meant that new authors were able to learn how to author with minimal support from ISLI's TEL staff. A number of sessional staff with experience in online teaching or materials development were redeployed from teaching on the summer programme to materials development. We gave them a lot of support in the early stages of materials development: providing models and templates, storyboarding, and reviewing drafts together. We also produced a style guide so that we had consistent formatting conventions and presentation standards.

The Xerte lessons were accessed via links in Blackboard, and in the end-of-course evaluations we asked students and teaching staff a number of open and closed questions about their response to Xerte.

Impact

We were not in a position to assess the impact of the Xerte lessons on learning outcomes, as we were unable to separate it from the impact of other aspects of the programme (e.g. live lessons, teacher feedback on written work). Students were assessed on a combination of coursework and formal examinations (discussed by Fiona Orel in other posts to the T&L Exchange), and overall grades at different levels of performance were broadly in line with those of previous years, when the online component of the programme was minimal.

In the end-of-course evaluation, students were asked "How useful did you find the Xerte lessons in helping you improve your skills and knowledge?" Of the 245 students who responded to this question, 137 (56%) answered "Very useful", 105 (43%) "Quite useful" and 3 (1%) "Not useful". The open questions provided a lot of useful information that we are taking into account in revising the programme for 2021. Some students had technical issues with playing video, and some tasks had bugs; most of these issues were resolved after students flagged them up during the course. In other comments, students said that feedback needed to be improved for some tasks, that some of the Xerte lessons were too long, and that we needed to give students a way to return quickly to specific Xerte lessons for review later in the course.

Reflections

We learned a lot, very quickly, about instructional design for online learning.

Instructions for asynchronous online tasks need to be very explicit and unambiguous, because when students are working through Xerte lessons they cannot check their understanding with peers or tutors. We produced a video and a Xerte lesson to help students understand how to work with Xerte lessons so that they get the most learning out of them.

The same applies to feedback. In addition, to be of value, automated feedback generally (but not always) needs to be detailed, explaining why specific answers are correct or incorrect. We found that, in some cases, short videos embedded in the feedback were more effective than written feedback.

In theory, Xerte can track individual student use and performance when lessons are uploaded into Blackboard as SCORM packages, with grades feeding into the Grade Centre. In practice, this only works well for a limited range of task types. The most effective way to track engagement was to follow up Xerte lessons with short Blackboard tests. This is not an ideal solution, and we are looking at other tracking options (e.g. xAPI).

Over the 4 years we have been working with Xerte, we have occasionally heard suggestions that Xerte is too complex for academics to learn to use. This was emphatically not our experience in Summer 2020. A number of new authors were able to develop pedagogically sound Xerte lessons, using a range of task types, to high presentation standards, with almost no one-to-one support from the ISLI TEL team. We estimate that, on average, new authors need to spend about 5 hours learning how to use Xerte before they can develop materials at an efficient speed with minimal support.

Another suggestion was that developing engaging, interactive learning materials in Xerte is so time-consuming that it is not cost-effective. It is time-consuming, but in a situation where we felt we had no alternative, we achieved everything we set out to achieve. Covid and the need to develop a fully online course under time pressure really focused our minds. The Xerte lessons will need reviewing, and some will definitely need revision, but we face Summer 2021 in a far more resilient and sustainable position than at this time last year. We learned that it makes sense to plan for a shelf life of at least 5 years for online learning materials, with regular review and updating.

Finally, converting the paper-based materials for online learning forced us to critically assess them in forensic detail, particularly in the ways students would work with those materials. In the end we did create some new content, particularly in response to changes in the ways that students work online or use technological tools on degree programmes.

Follow up

We are now revising the Xerte lessons on the basis of what we learned from authoring in Xerte and the feedback we received from colleagues and students. In particular, we are working on:

- ways to better track student usage and performance

- ways to better integrate learning in Xerte lessons with tasks in live lessons

- improvements to feedback.

For further information, or if you would like to try out Xerte as an author and with students, please contact j.p.smith@reading.ac.uk, and we can set up a trial account for you on the ISLI installation. If you are already authoring with Xerte, you can also join the UoR Xerte community by asking to be added to the Xerte Users Team.

Links and References

The ISLI authoring website provides advice on instructional design with Xerte, user guides on a range of page types, and showcases a range of Xerte lessons.

The international Xerte community website provides Xerte downloads, news on updates and other developments, and a forum for discussion and advice.

Finally, this website, itself authored in Xerte, provides the most comprehensive showcase of the different page types available in Xerte, showing its potential functionality across a broad range of disciplines.