Jo Anna Reed Johnson, Gaynor Bradley, Chris Turner

Overview

This article outlines how we rethought the delivery of the science practical elements of the Subject Knowledge Enhancement (SKE) programme when the impact of Covid-19 in March 2020 forced a move online. It focuses on what we learnt from delivering the practical elements online, as these required students to engage in laboratory activities to develop their subject knowledge skills in Physics, Chemistry and Biology related to the school national curriculum over two weeks in June and July 2021. Whilst we will continue to deliver some elements of the programme online post Covid-19, there are aspects of practical work where our students would still benefit from hands-on experience in the laboratory, with the online resources enhancing that experience.

Objectives

- Redesign IESKEP-19-0SUP: Subject Knowledge Enhancement Programmes so it was COVID-safe and fulfilled the programme objectives in terms of Practical work

- Redesign how students could access school science practical work with no access to laboratories. This relates to the Required Practical work for GCSE and A Level

- Ensure opportunities for discussion and collaboration when conducting practical work

- Provide students with access to resources (a posted resource pack and a shopping list)

- Review student perspectives related to the response to online provision

Context

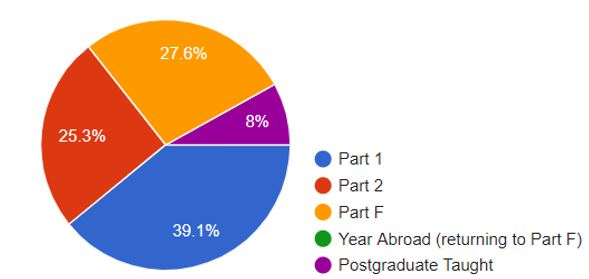

In June 2020 there was no access to the science laboratories at the Institute of Education (L10) due to the Covid pandemic. The Subject Knowledge Enhancement Programme (SKE) is a pre-PGCE requirement for applicants who want to train to be a teacher but may be at risk of not meeting the right level of subject knowledge (Teacher Standard 3) by the end of their PGCE year (a one-year postgraduate teacher training programme). We had 21 SKE Science (Physics, Chemistry, Biology) students on the programme, three academic staff, one senior technician and two practical weeks to run. We had to think quickly and imaginatively. With a plethora of resources for school practical science available online, we set about reviewing these and deciding what we might use to provide our students with the experience they needed. We also streamlined the programme content for the practical weeks, as working online requires more time.

Implementation

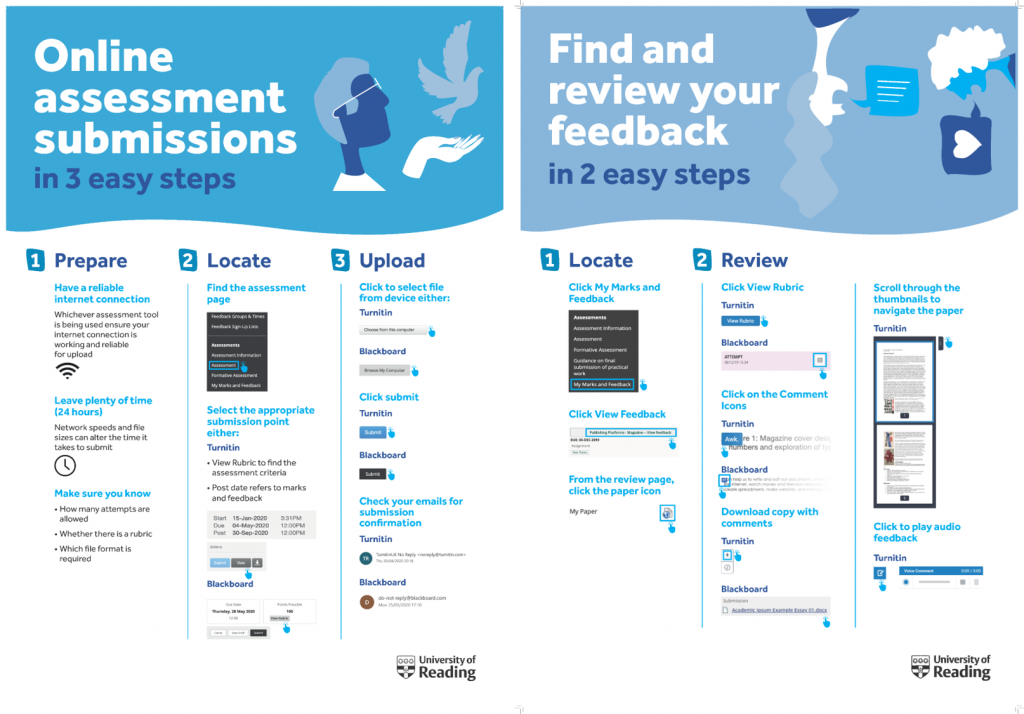

In May 2020, the senior science technician was allowed access to the labs. With a lot of work, she prepared a small resource pack with some basic equipment that was posted to each student. This was supplemented with a shopping list that students would prepare in advance of the practical weeks. For the practical week programme, we focused on making use of free videos that are available on YouTube and the Web (https://www.youtube.com/watch?v=jBVxo5T-ZQM and https://www.youtube.com/watch?v=SsKVA88oG-M&list=PLAd0MSIZBSsHL8ol8E-a-xgdcyQCkGnGt&index=12).

Having been part of an online lab research project at the University of Leicester, I introduced this to students for simulations, along with PhET (https://www.golabz.eu/ and https://phet.colorado.edu/).

We also wanted students to still feel some sense of ‘doing practical work’, so we set up home labs for those topics we deemed suitable, e.g. heart dissection, quadrats and making indicators.

PowerPoint presentations were narrated or streamed. We set up regular meetings with colleagues each morning before the practical day started. We gave live introductions to the students to outline the work to be covered that day. In addition, we organised drop-in sessions, such as mid-morning breaks and end-of-day reviews, for discussion with the students. Throughout, students worked in groups in which they would meet, discuss questions and share insights. This took place through MS Teams meetings and channels, where we were able to build communities of practice.

Impact

End-of-programme feedback was collected via our usual emailed evaluation sheet at the end of the practical weeks and at the end of the programme. We received 5 responses from the 21 students. The overall feedback was that students had enjoyed the collaboration and thought the programme was well structured.

‘I particularly enjoyed the collaborative engagement with both my colleagues and our tutors. Given that these were unusual circumstances, it was important to maintain a strong team spirit as I felt that this gave us all mechanisms to cope with those times where things were daunting, confusing etc but also it gave us all moments to share successes and achievements, all of which helped progression through the course. I felt that we had the right blend of help and support from our tutors, with good input balancing space for us to collaborate effectively.’

Student Feedback initial evaluation

‘I enjoyed “meeting” my SKE buddies and getting to know my new colleagues. I enjoyed A Level Practical Week and found some of the online tools for experimentation and demonstrating experiments very helpful’

Student Feedback initial evaluation

To supplement this feedback, as part of a small-scale scholarly activity across three programmes, we also sent out an MS Form for students to complete, to allow us to gain some deeper insights into the transition to online learning. We received 22 responses out of 100. The responses highlighted, for example, the students’ excitement at doing practical work, and the experience of using online tools that they could then use in their own teaching practice:

‘…the excitement of receiving the pack of goodies through the post was real and I enjoyed that element of the program and it’s been genuinely useful. I’ve used some of those experiments that we did in the classroom and as PERSON B said virtually as well.’

Student Feedback MS Form

‘…some of the online simulators that we used in our SKE we’ve used. I certainly have used while we’ve been doing online learning last half term, like the PHET simulators and things like that…’

Student Feedback MS Form

The students who consented to take part engaged in four discussion groups (five participants per group, 20 participants in total). These took place on MS Teams and once again highlighted the benefits of online group engagement, as well as showing that the programme objectives could still be met:

‘I just wanted to say, really. It was it was a credit to the team that delivered the SKE that it got my subject knowledge to a level where it needed to be, so I know that the people had to react quickly to deliver the program in a different way…’

Student Feedback Focus Group

Some feedback helped us to review the programme and fed into its development, such as surprise at how much independent learning there is on the programme, and comments on the amount of resources or other materials (e.g. exam questions).

Reflections

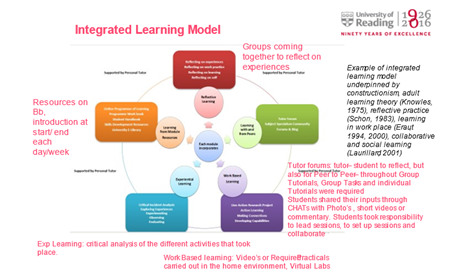

We adopted an integrated learning model:

We learnt that you do not have to reinvent the wheel. With the plethora of tools online, we just needed to be selective, asking ‘did the tool achieve the purpose or learning outcome?’. In planning and running online activities we engaged with Gilly Salmon’s (2004) five-stage model of e-learning. This provides structure, and we would apply it to our general planning in the use of Blackboard or other TEL tools in the future. We will continue to use the tools we used. These are useful T&L experiences for our trainees as schools move more and more towards engagement with technology.

However, the students still felt that nothing can replace actual practical work in the labs:

‘I liked the online approach to SKE but feel that lab work face-to-face should still be part of the course if possible. There are two reasons for this: skills acquisition/ familiarity with experiments and also networking and discussion with other SKE students.’

Student Feedback MS Form

Where possible we will do practical work in the labs but supplement this with the online resources, videos and simulation applications. We will make sure that the course structure prioritises the practical work but also incorporates aspects of online learning.

We will continue to provide collaborative opportunities and engage students online for group work and tutorials in future years. We also found that the ways in which we collaborated through communities of practice, on MS Teams channels, were very effective. We set up groups that continued to work in similar ways throughout the course, sharing ideas by posting evidence and then engaging in discussion. Again, this is something we will continue to do so that when our students are dispersed across the school partnership, in different locations, they can still be in touch and work on things collaboratively.

Links and References

Online Lab case Studies

Practical week Free Videos

https://www.youtube.com/watch?v=jBVxo5T-ZQM

https://www.youtube.com/watch?v=SsKVA88oG-M&list=PLAd0MSIZBSsHL8ol8E-a-xgdcyQCkGnGt&index=12
