Philippa Cranwell p.b.cranwell@reading.ac.uk, Jenny Eyley, Jessica Gusthart, Kevin Lovelock and Michael Piperakis

Overview

This article outlines a re-design of the Part 1 autumn/spring chemistry module, CH1PRA, which serves approximately 45 students per year. All students complete practical work over 20 weeks of the year. There are four blocks of five weeks of practical work in rotation (introductory, inorganic, organic and physical), and students spend one afternoon (4 hours) in the laboratory per week. The re-design was partly prompted by COVID. We were forced to look critically at the experiments students completed, to ensure that the practical skills they developed during the pandemic remained relevant for Part 2 and beyond. We also needed the assessments to work as stand-alone exercises if COVID prevented the completion of practical work. COVID actually gave us an opportunity to re-invigorate the course and to appraise critically whether the skills students were developing, and the way they were assessed, were still relevant for employers and later study.

Objectives

• Redesign CH1PRA so it was COVID-safe and fulfilled strict accreditation criteria.

• Redesign the experiments so as many students as possible could complete practical work by converting some experiments so they were suitable for completion on the open bench to maximise laboratory capacity

• Redesign assessments so if students missed sessions due to COVID they could still collect credit

• Minimise assessment load on academic staff and students

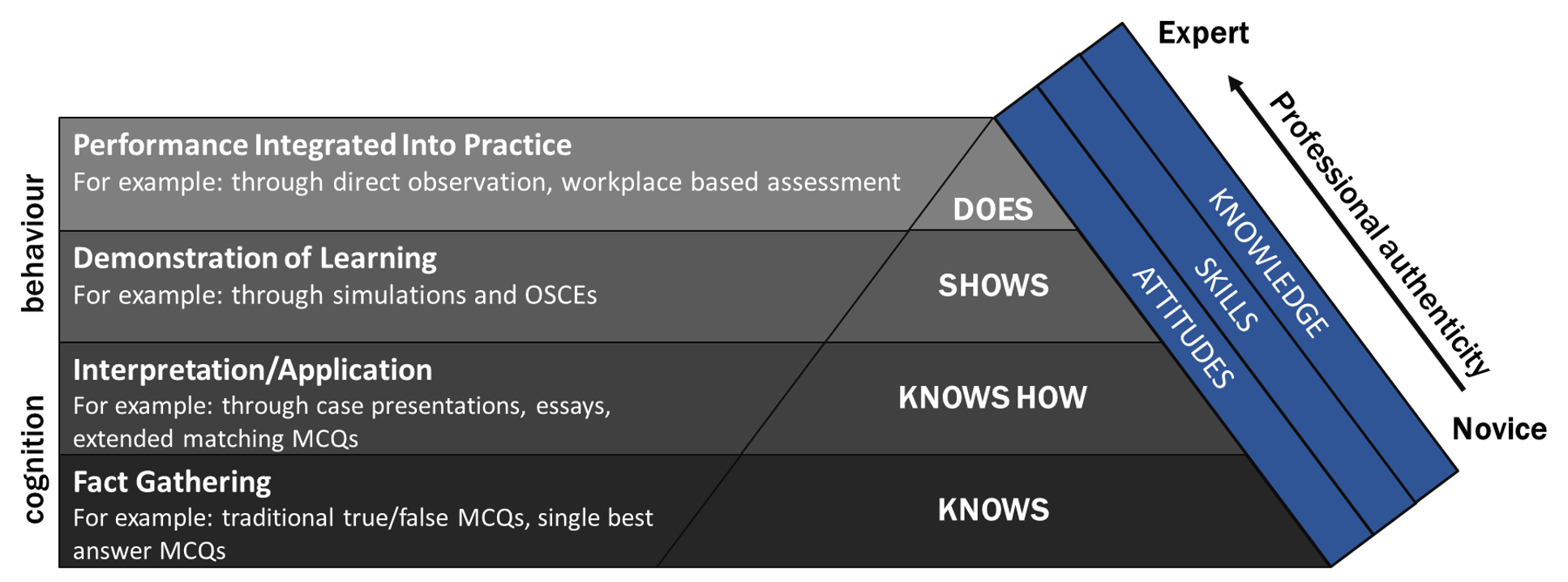

• Move to a more skills-based assessment paradigm, away from the traditional laboratory report.

Context

As mentioned earlier, the COVID pandemic made it significantly harder to provide a practical class because of restrictions on the number of students allowed in the laboratory. Only 12 students could work in the fumehoods and 12 on the open bench, compared with up to 74 students, all using fumehoods, previously. Prior to the redesign, each student completed four or five assessments per 5-week block, and all of the assessments related to a laboratory-based experiment. In addition, the majority of the assessments required students to complete a pro-forma or a technical report. We noticed that the pro-formas did not encourage students to engage with the experiments as we intended, so students carried out the experiments passively. The technical reports placed a significant marking burden on the academic staff. Each rotation also had different requirements for the content of the report, leading to confusion and frustration among the students. With the advent of COVID, the dependence of the assessments on completing a practical experiment was also deemed high-risk, so we had to rethink our assessment and practical experiment regime.

Implementation

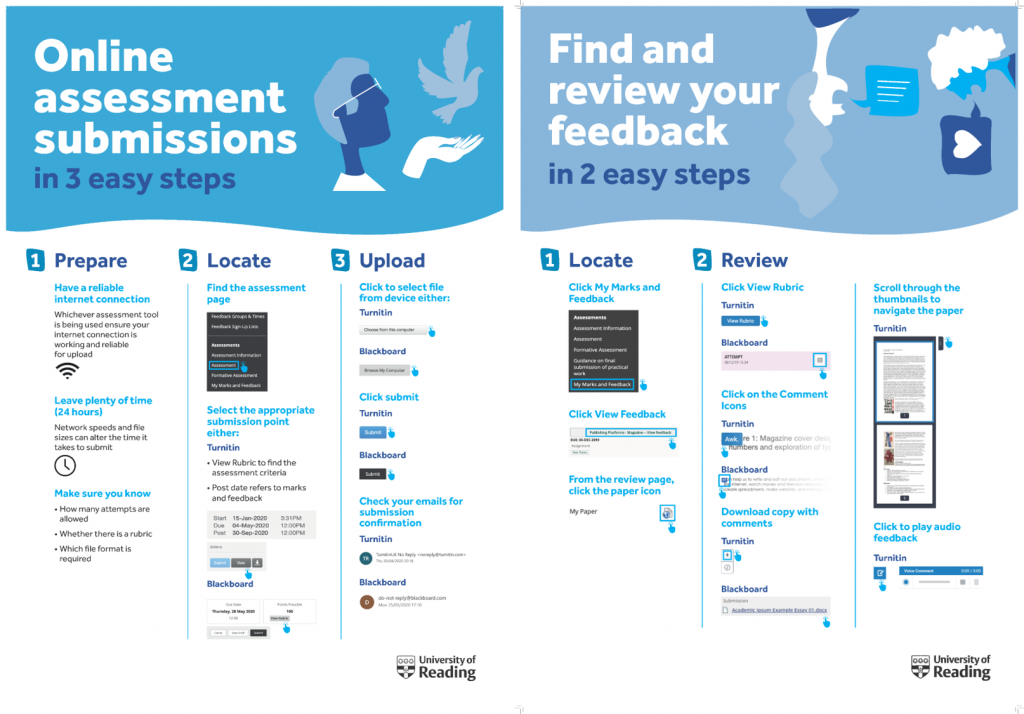

In most cases, the COVID-safe bench experiments were adapted from existing procedures. This allowed 24 students per week to be accommodated (12 on the open bench and 12 in the fumehoods), with students completing two practical sessions every five weeks. It also meant that technical staff did not have to familiarise themselves with new experimental procedures while implementing COVID guidelines. In addition, three online exercises per rotation were developed, each requiring the same amount of time to complete as a practical class, thereby fulfilling our accreditation requirements. The majority of assessments were linked to these ‘online practicals’, with opportunities for feedback during online drop-in sessions. A student who had to self-isolate could therefore still complete the assessments by the deadline, reducing the likelihood of ECF submissions and ensuring that all Learning Outcomes would still be met.

To reduce the assessment burden on staff and students, each 5-week block had three assessment points. Where possible, one of these assessments was marked automatically, e.g. using a Blackboard quiz. The assessments themselves were designed to be more skills-based, developing the soft skills students will need in employment or on placement. To encourage active learning, reflection was embedded into the assessment regime. We hoped that by critically appraising their performance in the laboratory, students would better remember the skills and techniques they had learnt, countering the “see, do, forget” mentality that is prevalent in practical classes.

Examples of assessments include:

• undertaking data analysis, with a focus on clear presentation of data;

• critical self-reflection on the skills developed during a practical class, i.e. “what went well”, “what didn’t go so well” and “what would I do differently?”;

• critical engagement with a published scientific procedure;

• giving a three-minute presentation about a practical scientific technique commonly encountered in the laboratory.

Impact

Mid-module evaluation was completed using an online form, which provided useful feedback that will be used to improve the student experience next term. The majority of students agreed or strongly agreed that staff were friendly and approachable, that face-to-face practicals were useful and enjoyable, that the course was well run and that the supporting materials were useful. This was heartening to read. It meant that the adjustments we had to make to the delivery of laboratory-based practicals had not had a negative impact on the students’ experience, and that the re-design was, for the most part, working well. Staff enjoyed marking the varied assessments, and their workload was significantly reduced by using Blackboard functionality.

Reflections

To claim that all is perfect with this redesign would be disingenuous. There was a slight disconnect between what we expected students to achieve from the online practicals and what they were actually achieving. A number of the students polled disliked the online practical work, mainly because the assessment requirements were unclear. We have addressed this by providing additional videos that explicitly outline the expectations for the assessments, and by ensuring that all students are aware of the drop-in sessions. In addition, we amended the assessments so that they align more closely with the face-to-face practical sessions, giving students the opportunity to receive informal feedback during the practical class.

In summary, we are happy that the assessments are now more varied and provide students with the skills they will need throughout their degree and after graduation. In addition, the assessment burden on staff and students has been reduced. Looking forward, we will now consider the experiments themselves. In 2021/22 we will increase the number of hours of practical work that Part 1 students complete and further embed our skills-based approach into the programme.

Follow up