Will Warley and Allán Laville

Overview

This project aimed to provide Trainee Psychological Wellbeing Practitioners (PWPs) on the MSci Applied Psychology (Clinical) course with guides containing evidence-based techniques and strategies to manage their wellbeing and workload over the course of their placement year. Here we reflect on the benefits of completing this project as well as the potential benefits of these materials for future trainee PWPs.

Objectives

- To create guides which would support the wellbeing and workload of trainee PWPs on the MSci programme.

- To evaluate the impact of these materials via feedback from current trainee PWPs on the MSci programme.

Context

In their third year, students on the MSci Applied Psychology (Clinical) course train as PWPs. PWPs are trained to assess and treat a range of common mental health problems and work predominantly in Improving Access to Psychological Therapies (IAPT) services. Research indicates that wellbeing is poor among PWPs working in IAPT services; Westwood et al. (2017), in a survey of IAPT practitioners, found that 68% of PWPs were suffering from ‘problematic levels of burnout’. Given the prevalence of burnout among PWPs, it is critical that trainee PWPs are equipped with effective, evidence-based strategies to manage their wellbeing.

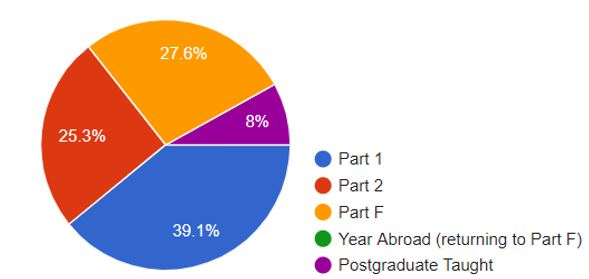

Will Warley (4th year student – MSci Applied Psychology) approached Allán Laville (Dean for Diversity and Inclusion & MSci Applied Psychology (Clinical) Course Director) about creating wellbeing guides for trainee PWPs.

Implementation

The preliminary stage of the project involved reflecting on the challenges that trainee PWPs face during their training. Having just completed my own PWP training, I (Will) was well placed to understand these challenges. They included: balancing university, placement and part-time work; ‘switching off’ after placement days; managing stress; organisation (in placement and in university); and clinical challenges, such as having a client not recover after treatment.

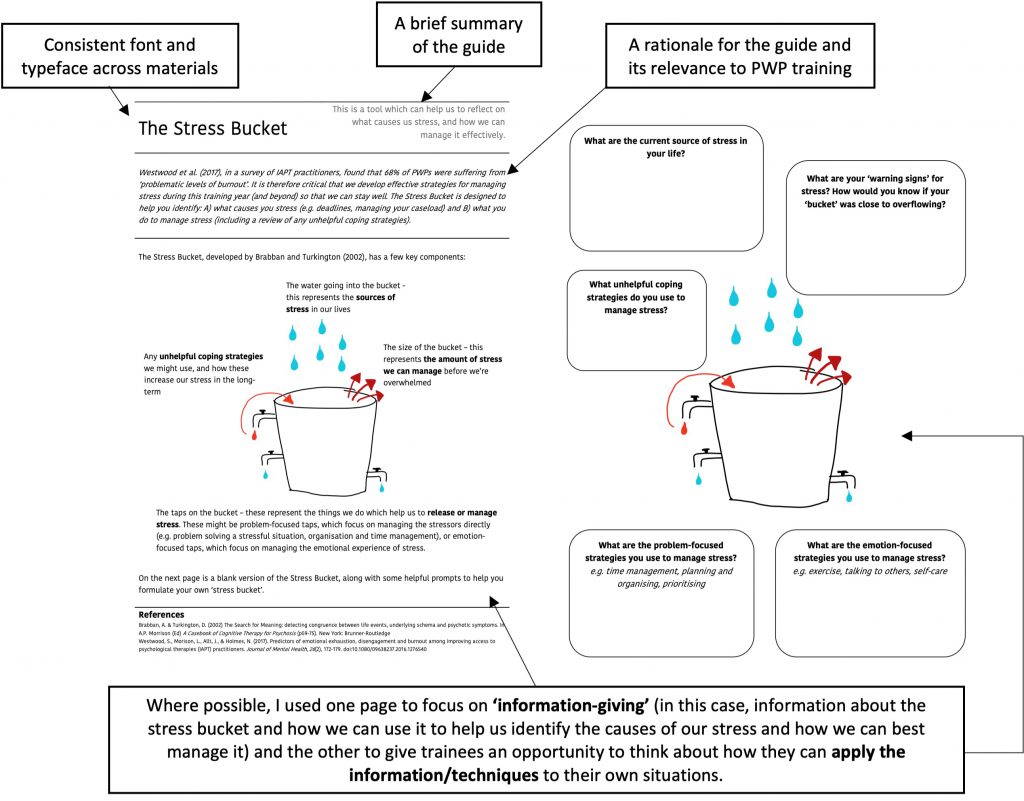

After reflecting on these challenges, I identified evidence-based techniques that could help students to overcome them, and tailored these techniques to trainee PWPs. Using evidence-based techniques underpinned by a well-founded model (such as Cognitive Behavioural Therapy) is important because techniques or strategies that lack evidence may be ineffective or, worse, potentially harmful.

A consistent approach to the design of the guides was adopted. This is highlighted below in the annotated ‘Stress Bucket’ guide:

Impact

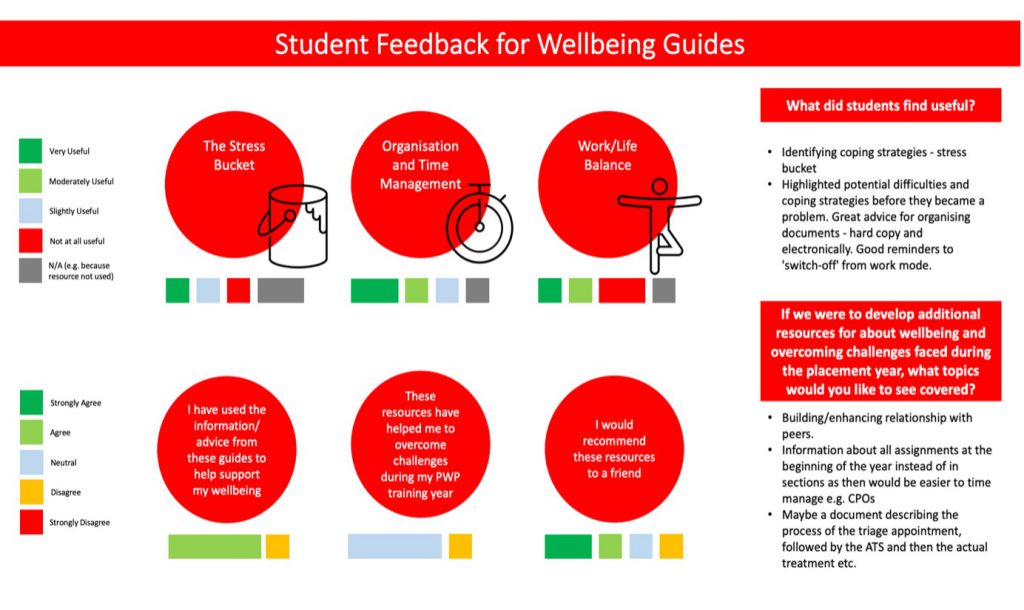

Current trainee PWPs were asked to provide anonymous feedback six weeks after being presented with the wellbeing resources. Their responses are summarised in the infographic below (N.B. one response was excluded from the final data as the respondent indicated that they had not used any of the resources).

The small number of respondents (N=5) limits the overall utility of the feedback. However, it was promising to see that 4 of the 5 respondents had used the information or advice to support their wellbeing.

Reflections

Allán Laville’s reflections:

It is really encouraging to see how students have used the resources and that they would recommend the resources to a friend. From previous cohorts, we know that the level of clinical work increases in the Spring and Summer terms, so it will be very useful to collect further feedback later in the academic year.

Will Warley’s reflections:

Prioritising one’s wellbeing is critical for clinicians at any stage of their career, but it is particularly important during training, when many challenges are faced for the first time. It’s therefore very positive to see that the MSci trainees have found these resources helpful in supporting their wellbeing during their training year so far.

Follow up

The qualitative feedback from students highlights potential implications for similar wellbeing-related projects across the university. For example, one student highlighted that it was helpful to know potential difficulties and coping strategies ‘before they became a problem’. It may therefore be helpful for future projects to start by using existing data (e.g. from module feedback) and questionnaires to fully understand the challenges faced by students on that particular course, and then use this information to identify appropriate strategies students can use to overcome these challenges.

Current MSci students will be presented with the final two wellbeing guides at the start of the Spring term. These resources will also be presented to future Part 3 MSci students in order to support their wellbeing during their PWP training year.

Links and References

Westwood, S., Morison, L., Allt, J., & Holmes, N. (2017). Predictors of emotional exhaustion, disengagement and burnout among Improving Access to Psychological Therapies (IAPT) practitioners. Journal of Mental Health, 26(2), 172-179. doi:10.1080/09638237.2016.1276540